Question 101

The business reporting team requires that data for their dashboards be updated every hour. The pipeline that extracts, transforms, and loads the data for their dashboards takes 10 minutes to run in total.

Assuming normal operating conditions, which configuration will meet their service-level agreement requirements with the lowest cost?
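The cost side of this question is simple arithmetic: a 10-minute run fits well inside a 60-minute SLA, so the question is how many cluster-minutes each scheduling strategy consumes. The sketch below compares an hourly scheduled run with an always-on cluster. It assumes, hypothetically, that compute is billed only while a cluster is running and it ignores cluster start-up time.

```python
# Back-of-the-envelope compute comparison for an hourly refresh SLA.
# Assumptions (not from the question): compute is billed only while a
# cluster is running, and cluster start-up time is ignored.

MINUTES_PER_HOUR = 60
RUN_MINUTES = 10   # pipeline processing time, from the question
SLA_MINUTES = 60   # data must be refreshed at least once per hour

# An hourly run only meets the SLA if it finishes within the hour.
assert RUN_MINUTES < SLA_MINUTES


def compute_minutes_per_day(always_on: bool) -> int:
    """Cluster-minutes consumed per day under each scheduling strategy."""
    if always_on:
        # A cluster kept running around the clock is billed every minute.
        return 24 * MINUTES_PER_HOUR
    # A cluster started once per SLA period is billed only for each run.
    runs_per_day = 24 * MINUTES_PER_HOUR // SLA_MINUTES
    return runs_per_day * RUN_MINUTES


scheduled = compute_minutes_per_day(always_on=False)
always_on = compute_minutes_per_day(always_on=True)
print(f"scheduled hourly: {scheduled} cluster-minutes/day")  # 240
print(f"always-on:        {always_on} cluster-minutes/day")  # 1440
```

Under these assumptions the scheduled job uses one sixth of the compute of an always-on cluster while still meeting the hourly SLA.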

Question 102

A Delta Lake table in the Lakehouse named customer_parsams is used in churn prediction by the machine learning team. The table contains information about customers derived from a number of upstream sources.

Currently, the data engineering team populates this table nightly by overwriting the table with the current valid values derived from upstream data sources.

Immediately after each update succeeds, the data engineering team would like to determine the difference between the new version and the previous version of the table.

Given the current implementation, which method can be used?
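Even though each nightly load overwrites the table, a Delta table keeps its prior versions readable through time travel (for example `spark.read.format("delta").option("versionAsOf", n)`), so both snapshots can be loaded and compared. The plain-Python sketch below shows only the comparison step, as set differences in each direction (the equivalent of `EXCEPT`). The rows and their columns (`customer_id`, `plan`, `churn_score`) are hypothetical stand-ins for the two table versions.

```python
from typing import NamedTuple


class Row(NamedTuple):
    # Hypothetical columns; the real customer_parsams schema is not given.
    customer_id: int
    plan: str
    churn_score: float


# Stand-ins for the previous and the newly written version of the table.
previous_version = [
    Row(1, "basic", 0.20),
    Row(2, "premium", 0.05),
    Row(3, "basic", 0.70),
]
new_version = [
    Row(1, "basic", 0.20),    # unchanged
    Row(2, "basic", 0.40),    # changed values
    Row(4, "premium", 0.10),  # new customer
]


def diff_versions(old, new):
    """Return (added, removed) rows, like EXCEPT applied in each direction."""
    old_set, new_set = set(old), set(new)
    added = sorted(new_set - old_set)
    removed = sorted(old_set - new_set)
    return added, removed


added, removed = diff_versions(previous_version, new_version)
# Keys present on both sides of the diff were updated rather than inserted/deleted.
changed_ids = {r.customer_id for r in added} & {r.customer_id for r in removed}
print("added:", added)
print("removed:", removed)
print("changed ids:", changed_ids)
```

Note that a full overwrite rewrites every row, so a row-level comparison like this, rather than the transaction log's file-level changes, is what isolates the records whose values actually differ.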

Question 103

A junior data engineer has been asked to develop a streaming data pipeline with a grouped aggregation using DataFrame df. The pipeline needs to calculate the average humidity and average temperature for each non-overlapping five-minute interval. Events are recorded once per minute per device.

df has the following schema: device_id INT, event_time TIMESTAMP, temp FLOAT, humidity FLOAT

Code block:

```python
df.withWatermark("event_time", "10 minutes")
    .groupBy(
        ________,
        "device_id"
    )
    .agg(
        avg("temp").alias("avg_temp"),
        avg("humidity").alias("avg_humidity")
    )
    .writeStream
    .format("delta")
    .saveAsTable("sensor_avg")
```

Which line of code correctly fills in the blank within the code block to complete this task?
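"Non-overlapping five-minute intervals" are tumbling windows. In PySpark, `pyspark.sql.functions.window(timeColumn, windowDuration)` called without a slide duration assigns each event to exactly one such window. The plain-Python sketch below mimics that bucketing on a few hypothetical readings so the grouping is easy to see; it does not model watermarking or streaming state.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

WINDOW = timedelta(minutes=5)
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def window_start(ts: datetime) -> datetime:
    """Floor a timestamp to the start of its five-minute tumbling window."""
    return EPOCH + ((ts - EPOCH) // WINDOW) * WINDOW


def tumbling_averages(events):
    """Average temp and humidity per (window start, device_id)."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # temp sum, humidity sum, count
    for device_id, event_time, temp, humidity in events:
        acc = sums[(window_start(event_time), device_id)]
        acc[0] += temp
        acc[1] += humidity
        acc[2] += 1
    return {key: (t / n, h / n) for key, (t, h, n) in sums.items()}


# Hypothetical data: one reading per minute for device 7 over ten minutes,
# which spans exactly two tumbling windows.
base = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
events = [(7, base + timedelta(minutes=m), 20.0 + m, 50.0) for m in range(10)]

averages = tumbling_averages(events)
for (start, device), (avg_temp, avg_humidity) in sorted(averages.items()):
    print(start.isoformat(), device, avg_temp, avg_humidity)
```

Each reading lands in exactly one window, which is what distinguishes a tumbling window from a sliding one (where a reading can be counted in several overlapping windows).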

Question 104

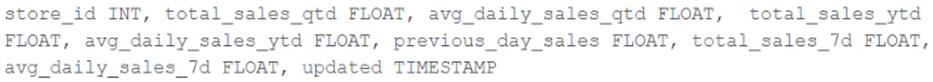

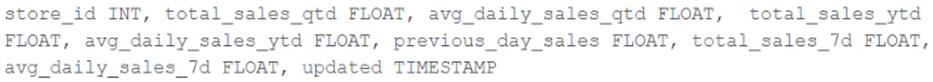

The business intelligence team has a dashboard configured to track various summary metrics for retail stores. This includes total sales for the previous day alongside totals and averages for a variety of time periods. The fields required to populate this dashboard have the following schema:

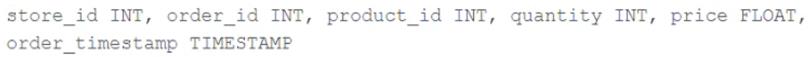

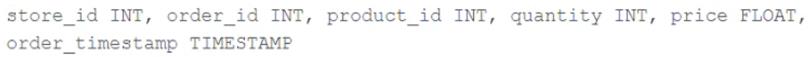

For demand forecasting, the Lakehouse contains a validated table of all itemized sales, updated incrementally in near real time. This table, named products_per_order, includes the following fields:

Because reporting on long-term sales trends is less volatile, analysts using the new dashboard only require data to be refreshed once daily. Because the dashboard will be queried interactively by many users throughout a normal business day, it should return results quickly and reduce total compute associated with each materialization.

Which solution meets the expectations of the end users while controlling and limiting possible costs?
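The cost trade-off here is between recomputing aggregates from the itemized sales on every dashboard query and materializing a small summary once per day that all queries then read. The plain-Python sketch below illustrates the second pattern. The field names (`order_date`, `store_id`, `sale_amount`) and the rows are hypothetical, since the actual products_per_order field list is not reproduced in the text.

```python
from collections import defaultdict
from datetime import date

# Hypothetical itemized sales rows: (order_date, store_id, sale_amount).
products_per_order = [
    (date(2024, 1, 1), "s1", 10.0),
    (date(2024, 1, 1), "s1", 5.0),
    (date(2024, 1, 1), "s2", 7.5),
    (date(2024, 1, 2), "s1", 12.0),
]


def build_daily_summary(rows):
    """Run once per day: total sales per (store, day)."""
    totals = defaultdict(float)
    for order_date, store_id, amount in rows:
        totals[(store_id, order_date)] += amount
    return dict(totals)


def store_average(summary, store_id):
    """Dashboard query: answered from the small summary table only."""
    daily = [total for (store, _), total in summary.items() if store == store_id]
    return sum(daily) / len(daily)


daily_summary = build_daily_summary(products_per_order)  # nightly job
print(daily_summary[("s1", date(2024, 1, 1))])  # 15.0
print(store_average(daily_summary, "s1"))       # 13.5
```

The scan over the itemized rows happens once per refresh, while the many interactive reads during the day touch only the pre-aggregated rows.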

Question 105

When scheduling Structured Streaming jobs for production, which configuration automatically recovers from query failures and keeps costs low?
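The settings this question turns on can be sketched as a job definition. The dict below follows the shape of a Databricks Jobs API 2.1 create request: task-level `max_retries` set to `-1` retries failed runs indefinitely, `max_concurrent_runs` of 1 prevents two copies of the same stream from running at once, and a `new_cluster` (job cluster) exists only for the run and is typically billed at a lower rate than an all-purpose cluster. The job name, notebook path, and cluster placeholders are hypothetical, and the checker function is illustrative only.

```python
import json

job_settings = {
    "name": "streaming-ingest",  # hypothetical job name
    "max_concurrent_runs": 1,    # never run two copies of the same stream
    "tasks": [
        {
            "task_key": "stream",
            "notebook_task": {"notebook_path": "/Jobs/streaming_ingest"},  # hypothetical
            # Job cluster: created for the run and terminated afterwards.
            "new_cluster": {
                "spark_version": "<runtime-version>",  # placeholder
                "node_type_id": "<node-type>",         # placeholder
                "num_workers": 2,                      # hypothetical sizing
            },
            "max_retries": -1,  # -1 means retry indefinitely after a failure
        }
    ],
}


def check_streaming_job(settings):
    """Return problems with the recovery- and cost-related settings.

    Simplified: only inline new_cluster definitions are recognized as job clusters.
    """
    problems = []
    if settings.get("max_concurrent_runs") != 1:
        problems.append("max_concurrent_runs should be 1")
    for task in settings["tasks"]:
        if task.get("max_retries") != -1:
            problems.append(f"{task['task_key']}: retries are not unlimited")
        if "new_cluster" not in task:
            problems.append(f"{task['task_key']}: not on a job cluster")
    return problems


print(json.dumps(job_settings, indent=2))
print(check_streaming_job(job_settings))  # []
```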