Question 11

A dataset has been defined using Delta Live Tables and includes an expectations clause:

CONSTRAINT valid_timestamp EXPECT (timestamp > '2020-01-01')

What is the expected behaviour when a batch containing data that violates this constraint is processed?

Question 12

An upstream system is emitting change data capture (CDC) logs that are being written to a cloud object storage directory. Each record in the log indicates the change type (insert, update, or delete) and the values for each field after the change. The source table has a primary key identified by the field pk_id.

For auditing purposes, the data governance team wishes to maintain a full record of all values that have ever been valid in the source system. For analytical purposes, only the most recent value for each record needs to be recorded. The Databricks job to ingest these records occurs once per hour, but each individual record may have changed multiple times over the course of an hour.

Which solution meets these requirements?
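
To make the two requirements concrete, here is a minimal plain-Python sketch rather than Databricks code. It contrasts a full audit history with a "latest value per key" view. The sample records, the `seq` ordering field, and the helper `latest_per_key` are all hypothetical: the question does not say how changes are ordered, so an ordering column is assumed purely for illustration.

```python
# Hypothetical CDC records. `seq` is an ASSUMED ordering field (not named in
# the question); `pk_id` and the change types come from the scenario above.
cdc_log = [
    {"pk_id": 1, "change_type": "insert", "value": "a", "seq": 1},
    {"pk_id": 1, "change_type": "update", "value": "b", "seq": 2},
    {"pk_id": 2, "change_type": "insert", "value": "x", "seq": 3},
    {"pk_id": 2, "change_type": "delete", "value": "x", "seq": 4},
    {"pk_id": 1, "change_type": "update", "value": "c", "seq": 5},
]


def latest_per_key(records):
    """Replay changes in `seq` order, keeping only the newest row per pk_id."""
    latest = {}
    for rec in sorted(records, key=lambda r: r["seq"]):
        if rec["change_type"] == "delete":
            # A delete removes the key from the current-state view.
            latest.pop(rec["pk_id"], None)
        else:
            # Inserts and updates overwrite whatever was there before.
            latest[rec["pk_id"]] = rec
    return latest


# Auditing view: every change ever received is retained as-is.
audit_history = list(cdc_log)

# Analytical view: one row per surviving key, even though key 1 changed
# several times within the same hourly batch.
current_state = latest_per_key(cdc_log)
```

The point of the sketch is that one incoming batch feeds two differently shaped outputs: an append-only history and a deduplicated current state. How this maps onto specific Databricks features is left to the answer options.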

Question 13

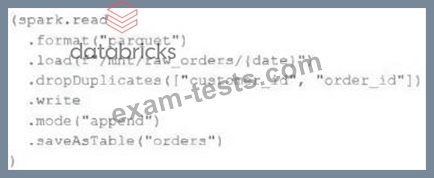

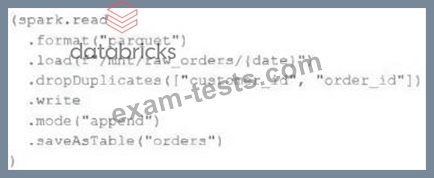

An upstream source writes Parquet data as hourly batches to directories named with the current date. A nightly batch job runs the following code to ingest all data from the previous day as indicated by the date variable:

Assume that the fields customer_id and order_id serve as a composite key to uniquely identify each order.

If the upstream system is known to occasionally produce duplicate entries for a single order hours apart, which statement is correct?

Question 14

A junior data engineer is migrating a workload from a relational database system to the Databricks Lakehouse.

The source system uses a star schema, leveraging foreign key constraints and multi-table inserts to validate records on write.

Which consideration will impact the decisions made by the engineer while migrating this workload?

Question 15

Assuming that the Databricks CLI has been installed and configured correctly, which Databricks CLI command can be used to upload a custom Python Wheel to object storage mounted with the DBFS for use with a production job?